The use of public cloud platforms such as Amazon Web Services (AWS) for OpenText Documentum-based ECM environments is often viewed critically, yet there are many arguments in favour of it. Whether it makes sense depends on the scope of the environment to be built, the data processed in it, and how deeply AWS is to be integrated into the company's own network. This blog post aims to help answer these questions and presents a first basic environment on the AWS platform.

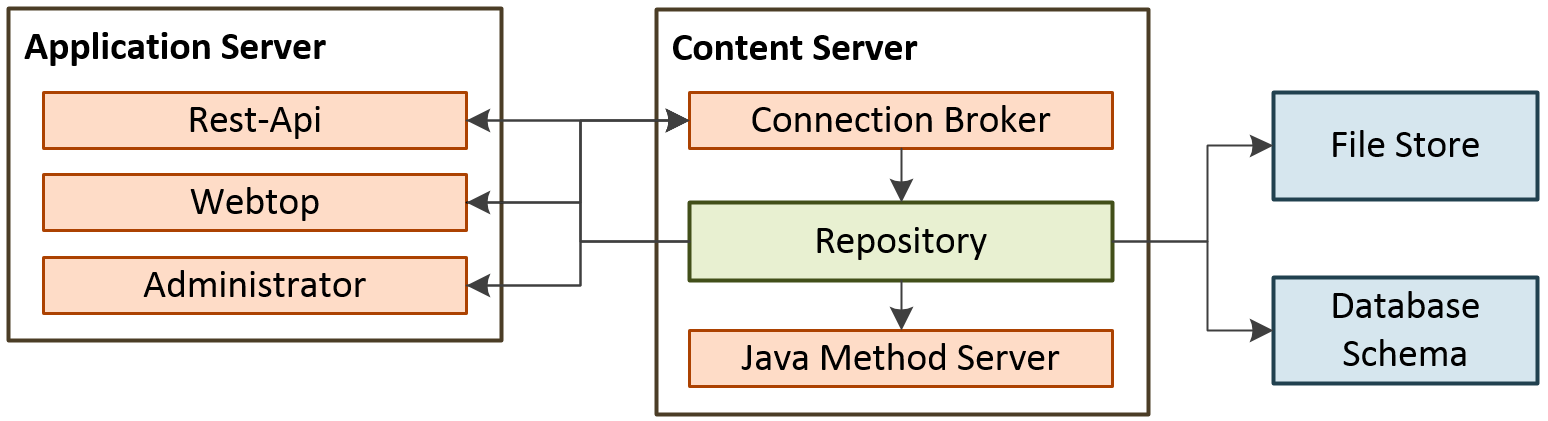

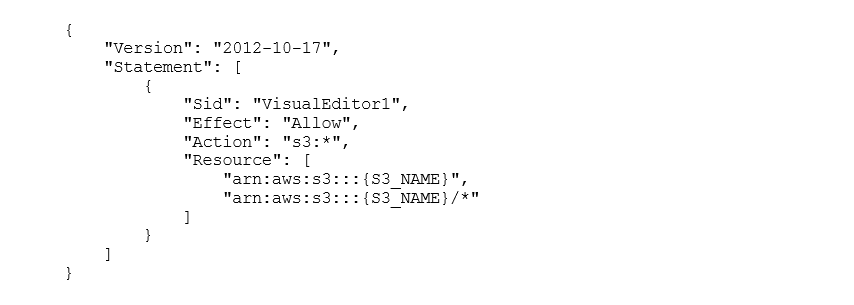

The first step is to define what a basic OpenText Documentum environment can look like. The mandatory Content Server runs a connection broker, the Java Method Server (JMS) and a repository. This is the standard layout, and we leave it unchanged. The repository requires a file share and a database schema. For the frontends, we go one step further than strictly necessary: a Documentum Administrator (DA) alone would be sufficient for accessing and administering the environment, but to make it a little more flexible we also include Webtop and the REST Services. Other components such as full-text indexing are out of scope for this blog post. Altogether, our layout for the basic installation looks like this:

Image 1: Basic Layout

You can install the infrastructure elements on EC2 servers in the traditional way, or move them from existing hardware to the AWS platform using Lift & Shift. However, this would fall a little short of our intentions. Particularly with regard to security and the intended use of the system, a number of things have to be taken into account. On the one hand, the OpenText Documentum environment must be embedded within AWS; on the other hand, access from outside must be controlled. For simplicity, we work with the basic structure of a single stage.

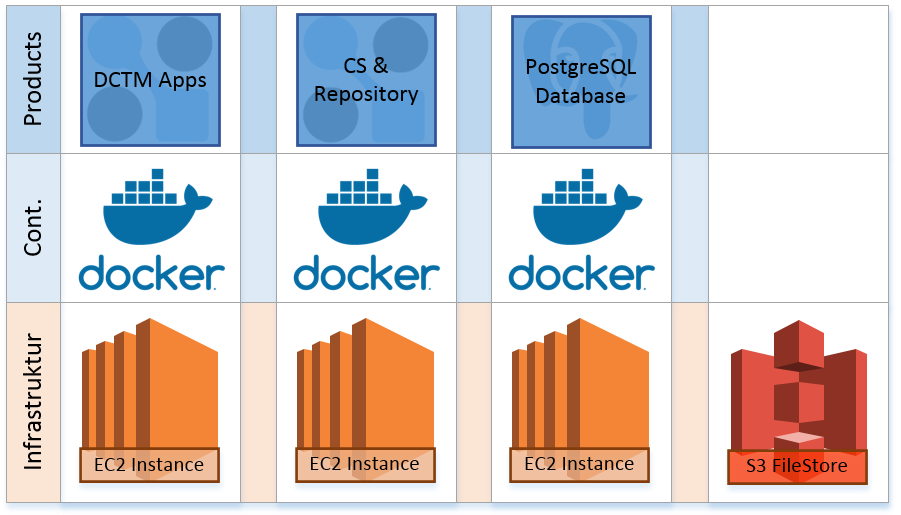

With Release 7.3, OpenText officially introduced containers for the most important components of a Documentum system, and the manufacturer has provided and supported them directly ever since. In this first step, we do not use any dedicated AWS container services such as the Elastic Container Service (ECS) or the Elastic Kubernetes Service (EKS) for our Documentum environment. Instead, we run Docker on Linux EC2 instances. This is the setup of choice because it is much easier to configure and we do not need active clustering or load-balancing mechanisms for our environment. The Docker containers for the other three products (REST Services, Documentum Administrator and Webtop) can be built relatively easily and allow us to use our own internal structure.

In addition to the four Documentum products, we also run the database itself in a container. We use PostgreSQL for this, running on a dedicated EC2 instance. Alternatively, the AWS Relational Database Service (RDS) could be used, which provides PostgreSQL as a managed service. Given the low demands our environment places on the database, however, we decided on the self-administered variant, as it is significantly cheaper. Besides the database, a file share is required for storing the repository's content files. We decided to use AWS S3, which Documentum has supported since Release 16.4.

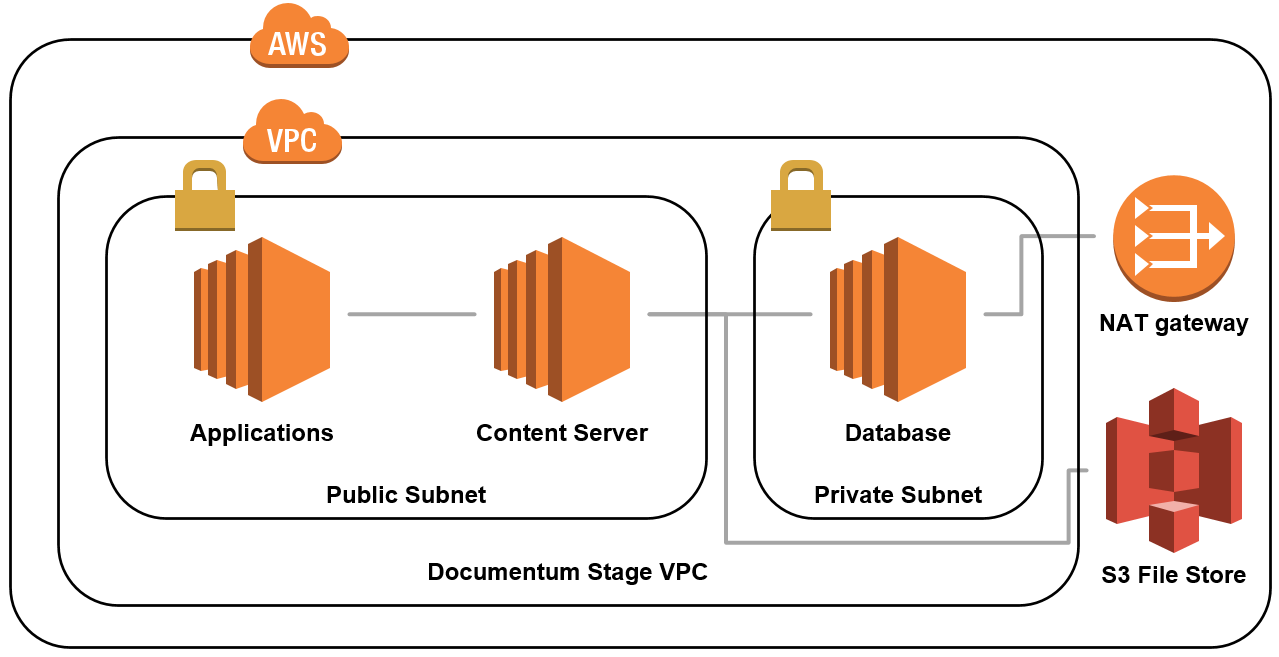

AWS Infrastructure

For the simple test scenario, we decided to create a VPC with one private subnet for the database and one public subnet for the Content Server and the frontend services. To ensure that the database instance can still receive updates, we also configured a NAT Gateway and added the corresponding route to the route table of the private subnet. After creating the VPC, we configured the security group of the database instance to accept inbound connections from within the VPC on ports 22 and 5432. The security group of the Content Server accepts all connections on ports 22, 1689, 50000 and 9080 as well as all ICMP (IPv4) traffic. The ICMP rule is important because the Docker container provided by OpenText checks the IP address of the host machine by pinging it and will not launch if this ping check fails. Finally, we configured a security group for the frontend components with ports 22 and 8080 open.
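The ingress rules above can be captured as data, which makes them easy to review and reapply. The following minimal Python sketch expresses them in the `IpPermissions` format of the EC2 API; a list like this could be passed to boto3's `ec2.authorize_security_group_ingress(GroupId=..., IpPermissions=...)`. The VPC CIDR `10.0.0.0/16`, the `0.0.0.0/0` source for "all connections" and the role we attribute to port 50000 are assumptions.

```python
# Sketch of the security group ingress rules described above, in the
# IpPermissions format of the EC2 API. VPC_CIDR and ANYWHERE are assumptions.
VPC_CIDR = "10.0.0.0/16"  # assumption: CIDR block of the VPC
ANYWHERE = "0.0.0.0/0"    # assumption: "all connections" means any source


def tcp_rule(port, cidr):
    """Build one TCP ingress rule for a single port and source range."""
    return {
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "IpRanges": [{"CidrIp": cidr}],
    }


# Database instance: SSH and PostgreSQL, reachable only from inside the VPC.
DATABASE_RULES = [tcp_rule(port, VPC_CIDR) for port in (22, 5432)]

# Content Server: SSH, connection broker (1689), repository (50000, our
# interpretation) and JMS/ACS (9080), plus all ICMP so that the container's
# ping check of the host address succeeds (-1/-1 means all ICMP types/codes).
CONTENT_SERVER_RULES = [tcp_rule(port, ANYWHERE) for port in (22, 1689, 50000, 9080)]
CONTENT_SERVER_RULES.append(
    {"IpProtocol": "icmp", "FromPort": -1, "ToPort": -1,
     "IpRanges": [{"CidrIp": ANYWHERE}]}
)

# Frontend instances (DA, Webtop, REST Services): SSH and Tomcat's HTTP port.
FRONTEND_RULES = [tcp_rule(port, ANYWHERE) for port in (22, 8080)]


def open_tcp_ports(rules):
    """Return the sorted TCP ports opened by a list of rules."""
    return sorted(rule["FromPort"] for rule in rules if rule["IpProtocol"] == "tcp")


if __name__ == "__main__":
    for name, rules in [("database", DATABASE_RULES),
                        ("content server", CONTENT_SERVER_RULES),
                        ("frontend", FRONTEND_RULES)]:
        print(f"{name}: {open_tcp_ports(rules)}")
```

Keeping the rules as plain data also makes it obvious that only the database group is restricted to the VPC; for anything beyond a test setup, the `0.0.0.0/0` sources would be the first thing to tighten.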

Image 2: AWS Infrastructure

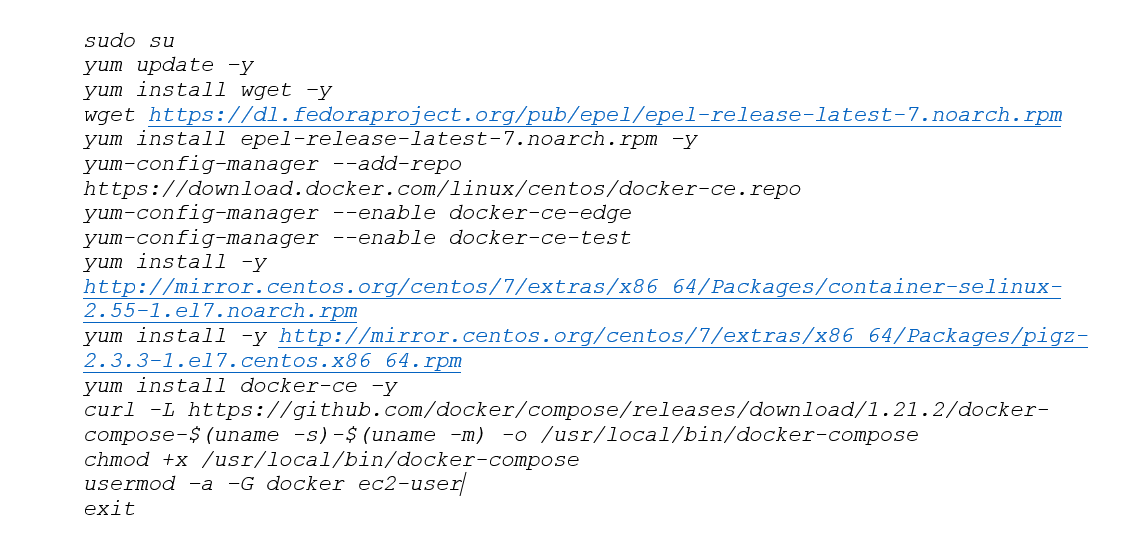

Setting up Docker and Docker-Compose

Having finalized the infrastructure, we launched a t2.small EC2 instance with the Red Hat Enterprise Linux AMI into the private subnet for the database and a t2.large EC2 instance with the same AMI into the public subnet for the Content Server. The latter also served as a jump host for accessing the database instance. We then installed Docker and Docker-Compose using the following commands:

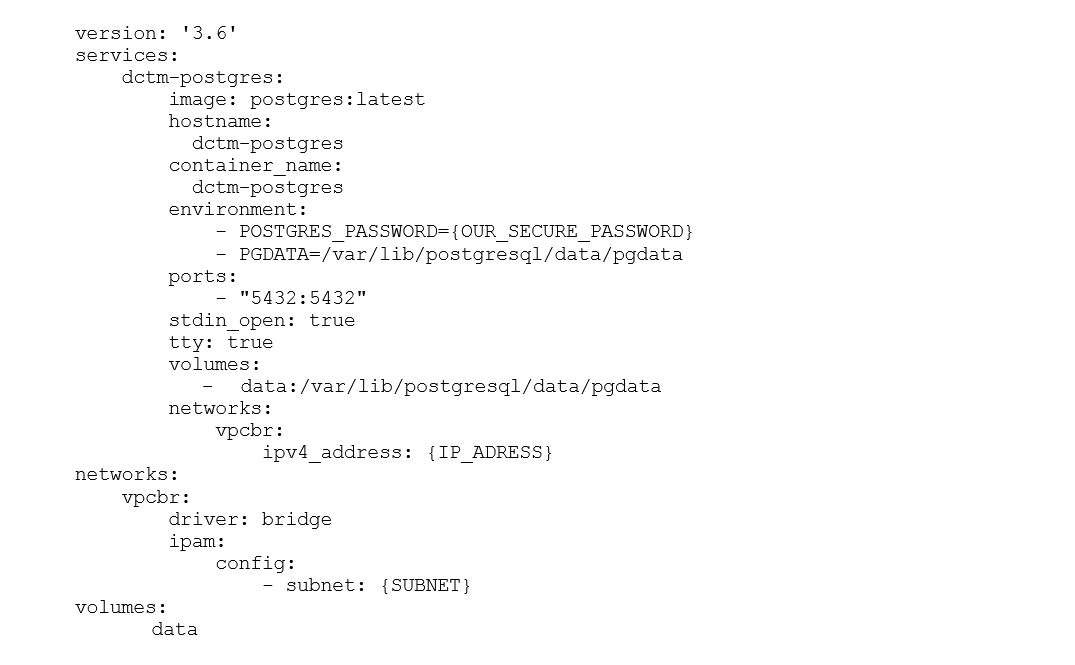
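Group membership is evaluated when a login session starts, so adding `ec2-user` to the `docker` group (for example with `usermod -aG docker ec2-user`, which we assume is part of the install commands) only takes effect after reconnecting. The following Python sketch, using only the standard library, reports whether the current session already holds the group; the status strings are our own naming.

```python
import grp
import os
import pwd


def docker_group_status(group_name="docker"):
    """Report whether the current session can already use the given group.

    Returns "missing" if the group does not exist, "active" if the current
    process already holds it, "relogin-needed" if the user is listed as a
    member but the session predates that change, and "not-member" otherwise.
    """
    try:
        group = grp.getgrnam(group_name)
    except KeyError:
        return "missing"
    # Groups of the running process are fixed when the session starts.
    if group.gr_gid == os.getgid() or group.gr_gid in os.getgroups():
        return "active"
    try:
        user = pwd.getpwuid(os.getuid()).pw_name
    except KeyError:
        return "not-member"
    # Listed in /etc/group but not active yet: reconnect to pick it up.
    if user in group.gr_mem:
        return "relogin-needed"
    return "not-member"


if __name__ == "__main__":
    print(docker_group_status())
```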

Configuration of the database

For the addition of "ec2-user" to the docker group to take effect, we need to disconnect from the instance and reconnect. Afterwards, we can create the Docker-Compose file "postgres.yml" with the following content:

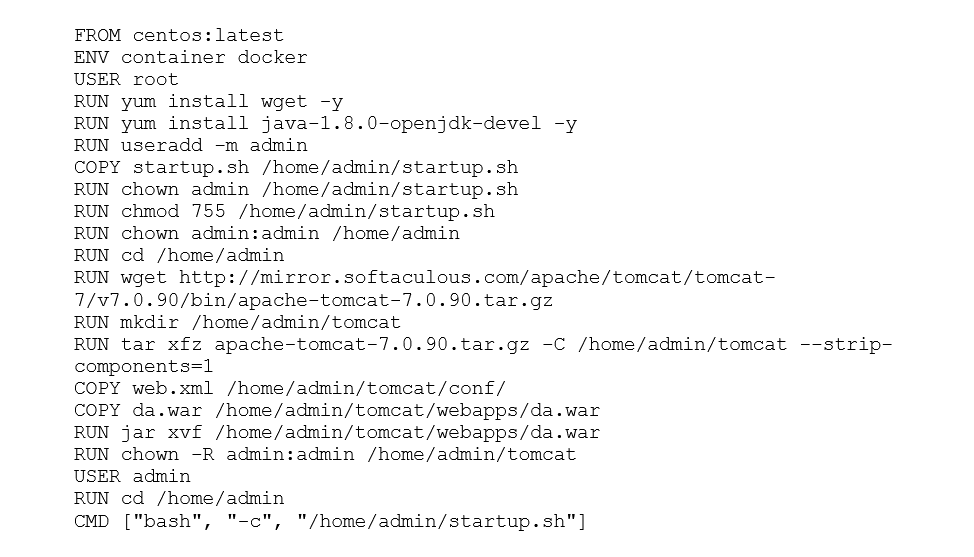
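The actual `postgres.yml` is only shown as a screenshot. As an illustration, the following Python sketch renders a comparable Docker-Compose file for the official `postgres` image; the image tag, the password and the volume name `pgdata` are assumptions, so use the PostgreSQL version certified for your Documentum release. It deliberately uses a named volume instead of a host bind mount, in line with the Content Server pitfalls described below.

```python
from string import Template

# Sketch of a Docker-Compose file for PostgreSQL. Tag, password and the
# volume name "pgdata" are placeholders/assumptions.
POSTGRES_COMPOSE = Template("""\
version: "3"
services:
  postgres:
    image: postgres:$tag
    restart: always
    environment:
      POSTGRES_PASSWORD: "$password"
    ports:
      - "5432:5432"
    volumes:
      # Named volume created and managed by Docker-Compose (no host bind mount).
      - pgdata:/var/lib/postgresql/data
volumes:
  pgdata:
""")


def render_postgres_compose(tag, password):
    """Return the compose file text; the password is embedded in double quotes."""
    if '"' in password:
        raise ValueError("password must not contain double quotes")
    return POSTGRES_COMPOSE.substitute(tag=tag, password=password)


if __name__ == "__main__":
    # Redirect the output into postgres.yml, e.g. "python render.py > postgres.yml".
    print(render_postgres_compose("9.6", "change-me"), end="")
```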

In the last steps, we started the database container with Docker-Compose and then created the folders required by the Content Server.
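One of the Content Server pitfalls below is a missing `db_{docbase}_dat.dat` folder in the database container. A hedged sketch of creating such a folder with `docker exec` follows. The container name `postgres`, the repository name `myrepo` and the assumption that the folder lives under the image's default data directory `/var/lib/postgresql/data` are ours; check the expected location against OpenText's container documentation.

```python
import shlex

# Assumption: the folder is expected under the default data directory of the
# official postgres image. Verify this against OpenText's documentation.
PGDATA = "/var/lib/postgresql/data"


def tablespace_dir(docbase, base=PGDATA):
    """Path of the db_{docbase}_dat.dat folder inside the database container."""
    return f"{base}/db_{docbase}_dat.dat"


def mkdir_command(container, docbase):
    """Build a docker exec call that creates the folder and hands it to postgres."""
    path = shlex.quote(tablespace_dir(docbase))
    script = f"mkdir -p {path} && chown postgres:postgres {path}"
    return ["docker", "exec", container, "sh", "-c", script]


if __name__ == "__main__":
    # Hypothetical container and repository names; pass the list to
    # subprocess.run(..., check=True) to actually execute it.
    print(shlex.join(mkdir_command("postgres", "myrepo")))
```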

Configuration of the Content Server

Now that the database is ready, we can proceed with configuring the Content Server instance and container. After installing Docker and Docker-Compose as described for the database instance, we copy the contentserver_docker_centos.tar file provided by OpenText to the instance and extract its contents. In the next step, the required tar file "Contentserver_Centos.tar" is created and loaded into Docker. Next, the YAML file "CS-Docker-Compose_Stateless.yml" provided by OpenText is adapted to the needs of the environment to be set up; passwords and other repository settings are stored here.

Pitfalls with the Content Server container:

Because the Content Server is complex and relies on various technologies such as Bash and Java, the initial configuration of the repository, which is performed during the first launch of the container, may fail for the following reasons:

- The absence of the db_{docbase}_dat.dat folder in the database container.

- The container for the database or the container for the Content Server itself uses a bind mount to an existing folder on the host, such as "/opt/dctm/data:/opt/dctm/data". In this case, one of the non-Bash components of the Content Server installer fails to perform the necessary write operations. This problem does not occur if all persistent folders are created by Docker-Compose as named volumes listed in the volumes section of the YAML file.

- Files left over from a previous failed installation remain in one of the persistent folders of the database or Content Server container. This can be solved by deleting all volumes after a failed installation attempt and recreating both the database and the Content Server containers.
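Two of these pitfalls can be checked mechanically. The following Python sketch flags short-syntax volume entries that map a host path (a bind mount) instead of a named volume, and builds the `docker-compose down --volumes` command that removes the stack's named volumes after a failed attempt. The helper names and the volume name `dctm_data` are hypothetical; note that `--volumes` does not remove data stored in host bind mounts.

```python
import shlex


def is_bind_mount(volume_spec):
    """True if a short-syntax compose volume entry maps a host path.

    Entries without ':' are anonymous volumes. Otherwise, a source starting
    with '/', '.' or '~' is a host path; any other source is a named volume.
    """
    if ":" not in volume_spec:
        return False
    source = volume_spec.split(":", 1)[0]
    return source.startswith(("/", ".", "~"))


def bind_mounts(volume_specs):
    """Return the entries that would map existing host folders."""
    return [spec for spec in volume_specs if is_bind_mount(spec)]


def reset_command(compose_file):
    """Stop the stack and remove its named volumes after a failed install."""
    return ["docker-compose", "-f", compose_file, "down", "--volumes"]


if __name__ == "__main__":
    specs = ["/opt/dctm/data:/opt/dctm/data", "dctm_data:/opt/dctm/data"]
    print(bind_mounts(specs))  # ['/opt/dctm/data:/opt/dctm/data']
    print(shlex.join(reset_command("CS-Docker-Compose_Stateless.yml")))
```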

Once the configuration is finished, the Content Server can be checked by sending a request with curl to one of its components, such as ACS: "curl {IP_ADDRESS}:9080/ACS/servlet/ACS".
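The same check can be scripted, for instance to poll until the first container launch has finished. This Python sketch uses only `urllib` from the standard library and assumes that a running ACS answers the servlet URL with HTTP 200.

```python
import http.client
import sys
import urllib.request


def acs_url(host, port=9080):
    """URL of the ACS servlet served on the JMS port."""
    return f"http://{host}:{port}/ACS/servlet/ACS"


def acs_is_up(host, port=9080, timeout=5.0):
    """Return True if the ACS servlet answers with HTTP 200 (our assumption)."""
    try:
        with urllib.request.urlopen(acs_url(host, port), timeout=timeout) as response:
            return response.status == 200
    except (OSError, http.client.HTTPException):
        # URLError, HTTPError, timeouts and refused connections are OSErrors.
        return False


if __name__ == "__main__":
    host = sys.argv[1] if len(sys.argv) > 1 else "127.0.0.1"
    print("ACS reachable" if acs_is_up(host) else "ACS not reachable")
```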

Configuration of the frontend components

With the backend configured and running, we launched three t2.small EC2 instances with RHEL 7.5 AMIs into the public subnet and installed Docker and Docker-Compose on them. Next, we built and started the corresponding containers, as described below using Documentum Administrator as an example.

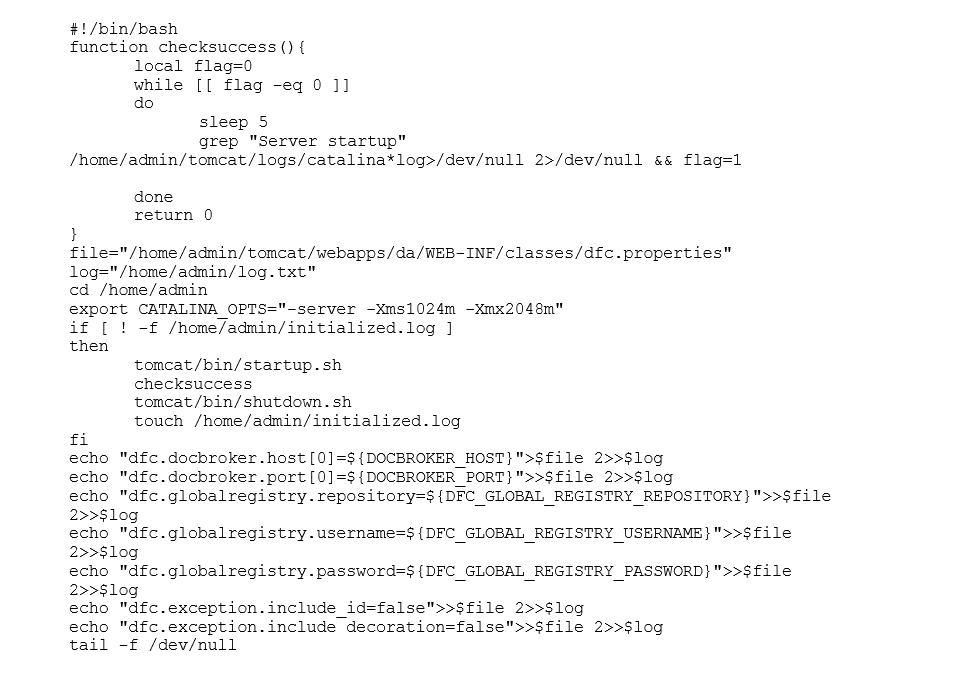

To prepare Documentum Administrator, the WAR file provided by OpenText is unpacked into a working folder where the necessary changes are made. First, the mandatory connection pooling is deactivated in the web.xml used by Tomcat. Afterwards, we create a new Dockerfile for the image.

The Dockerfile downloads Tomcat 7 from the web, copies the da.war file into the container and extracts it there. To ensure that Documentum Administrator has the correct parameters for connecting to the Content Server, we wrote a startup script, saved as "startup.sh", that writes the dfc.properties file based on the container's environment variables.
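The startup script itself is a shell script and is not reproduced here. The mapping it performs can be sketched in Python as follows. The keys `dfc.docbroker.host[0]`, `dfc.docbroker.port[0]` and `dfc.globalregistry.*` are standard DFC properties; the environment variable names are hypothetical, and 1689 is used as the default connection broker port.

```python
# Hypothetical environment variable names (left) mapped to standard
# dfc.properties keys (right).
ENV_TO_DFC = {
    "DOCBROKER_HOST": "dfc.docbroker.host[0]",
    "DOCBROKER_PORT": "dfc.docbroker.port[0]",
    "GLOBAL_REGISTRY_REPOSITORY": "dfc.globalregistry.repository",
    "GLOBAL_REGISTRY_USERNAME": "dfc.globalregistry.username",
    # DFC normally expects this value already encrypted; it is passed through as-is.
    "GLOBAL_REGISTRY_PASSWORD": "dfc.globalregistry.password",
}


def render_dfc_properties(env):
    """Build dfc.properties content from a mapping such as os.environ."""
    if not env.get("DOCBROKER_HOST"):
        raise ValueError("DOCBROKER_HOST must be set")
    values = dict(env)
    if not values.get("DOCBROKER_PORT"):
        values["DOCBROKER_PORT"] = "1689"  # default connection broker port
    # Only variables that are set and non-empty end up in the file.
    lines = [f"{key}={values[var]}" for var, key in ENV_TO_DFC.items() if values.get(var)]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Example with a hypothetical host; a startup script would pass os.environ
    # and write the result to the dfc.properties file of the DA web application.
    print(render_dfc_properties({"DOCBROKER_HOST": "10.0.1.10"}), end="")
```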

With all files prepared, we build the image using docker build. Finally, we create a YAML file called "dadmin.yml" that provides the IP address and port of the connection broker on the Content Server as well as other required parameters.
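A `dadmin.yml` along these lines could look as sketched below, rendered from Python so that the same template can be reused for other Content Servers. The image name and the environment variable names are hypothetical and must match whatever the image's startup script reads.

```python
from string import Template

# Sketch of dadmin.yml. Image name and environment variable names are
# hypothetical and must match what the image's startup script reads.
DADMIN_COMPOSE = Template("""\
version: "3"
services:
  dadmin:
    image: $image
    restart: always
    ports:
      - "8080:8080"
    environment:
      DOCBROKER_HOST: "$docbroker_host"
      DOCBROKER_PORT: "$docbroker_port"
""")


def render_dadmin_compose(image, docbroker_host, docbroker_port=1689):
    """Return compose text pointing DA at the given connection broker."""
    return DADMIN_COMPOSE.substitute(
        image=image, docbroker_host=docbroker_host, docbroker_port=docbroker_port
    )


if __name__ == "__main__":
    print(render_dadmin_compose("dctm-da:latest", "10.0.1.10"), end="")
```

Pointing DA at another Content Server then only means rendering the file again with a different `docbroker_host`.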

The container can then be started with Docker-Compose and can also be used with other Content Servers by simply updating the connection broker settings passed as environment variables in the YAML file. The containers for Webtop and REST Services were built following the same scheme, using the WAR files provided by OpenText.

Pitfalls with the Frontend containers:

- Although OpenText provides pre-configured Docker images for Webtop, Documentum Administrator and REST Services, these images did not work in our environment. Apparently, bugs in their configuration prevent them from starting properly.

- If the changes to web.xml described above are not made, Documentum Administrator and Webtop will not work.

- Neither Webtop nor Documentum Administrator starts if Tomcat is configured as a systemd service. A custom startup script is therefore needed to start Tomcat as a regular user (admin in our case).

- Neither Webtop nor Documentum Administrator works properly (at least not without additional configuration steps) if deployed in folders other than /path/to/tomcat/webapps/webtop and /path/to/tomcat/webapps/da, respectively.

Configuration of the S3-based File Store

A new feature of OpenText Documentum 16.4 is its native support for S3-backed file stores. To use it, we create a new S3 bucket called fme-showcase-docstore2, an IAM user for Documentum with programmatic access to AWS, and a policy that allows access to the bucket and all its objects.
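A policy of this kind could look as follows. This Python sketch builds an IAM policy document for `fme-showcase-docstore2` that grants bucket-level listing plus object-level read, write and delete. The exact set of actions Documentum requires is an assumption on our part; a broader policy such as `s3:*` on the bucket and its objects is the simpler but less restrictive alternative.

```python
import json


def s3_store_policy(bucket):
    """IAM policy document granting access to one bucket and all its objects.

    The action list is an assumption; Documentum may need more or fewer.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "BucketLevelAccess",
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                "Sid": "ObjectLevelAccess",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(s3_store_policy("fme-showcase-docstore2"), indent=2))
```

The printed JSON can be pasted into the IAM console or saved to a file and passed to `aws iam put-user-policy --policy-document file://policy.json`.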

We then attach this policy to the Documentum Installation Owner account. The last step is to configure the file store in Documentum Administrator. For this, we select "File->New->S3 Store" in the Storage menu of DA and provide the URL of the bucket as well as the access key ID and secret access key.

Pitfalls with the S3 stores:

- ACS must be active and correctly configured in order to use S3 stores. To ensure this, provide http://PUBLIC.IP.OF.CS:9080/ACS/servlet/ACS as the ACS URL.

- If a slash is provided at the end of the S3 bucket URL, the ACS will fail to connect to it.

- If some of the parameters were initially configured incorrectly, the ACS server must be restarted after correcting them so that it picks up the new configuration.
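Because a trailing slash broke the ACS connection, it is worth normalising the URLs before entering them in DA. A minimal Python sketch using only `urllib.parse`; the region in the example bucket URL and the example IP address are hypothetical.

```python
from urllib.parse import urlsplit


def normalize_bucket_url(url):
    """Strip trailing slashes from the S3 bucket URL entered in DA."""
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https") or not parts.netloc:
        raise ValueError(f"not an absolute http(s) URL: {url!r}")
    return url.rstrip("/")


def is_acs_url(url):
    """Check that the configured ACS URL points at the ACS servlet path."""
    parts = urlsplit(url)
    return parts.scheme in ("http", "https") and parts.path == "/ACS/servlet/ACS"


if __name__ == "__main__":
    # Hypothetical region in the bucket URL and documentation IP for ACS.
    print(normalize_bucket_url("https://s3.eu-central-1.amazonaws.com/fme-showcase-docstore2/"))
    print(is_acs_url("http://203.0.113.10:9080/ACS/servlet/ACS"))
```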

Summary and Outlook

In the end, we installed a complete OpenText Documentum environment on the AWS platform. The main components (Content Server, Documentum Administrator, Webtop and REST Services) as well as the PostgreSQL database run entirely in Docker containers on the AWS infrastructure.

Image 3: Final Setup

The scenario described in this blog article for integrating OpenText Documentum on the AWS platform is deliberately kept simple in order to demonstrate basic feasibility. Once the relevant pitfalls have been identified, it can be set up in just a few steps. The advantage of the AWS platform lies in the fast and uncomplicated provisioning of the necessary infrastructure components.

We will supplement this scenario in further articles on topics such as container orchestration, security concepts and integration of further OpenText Documentum products. Feel free to contact us if you are interested in more information.
